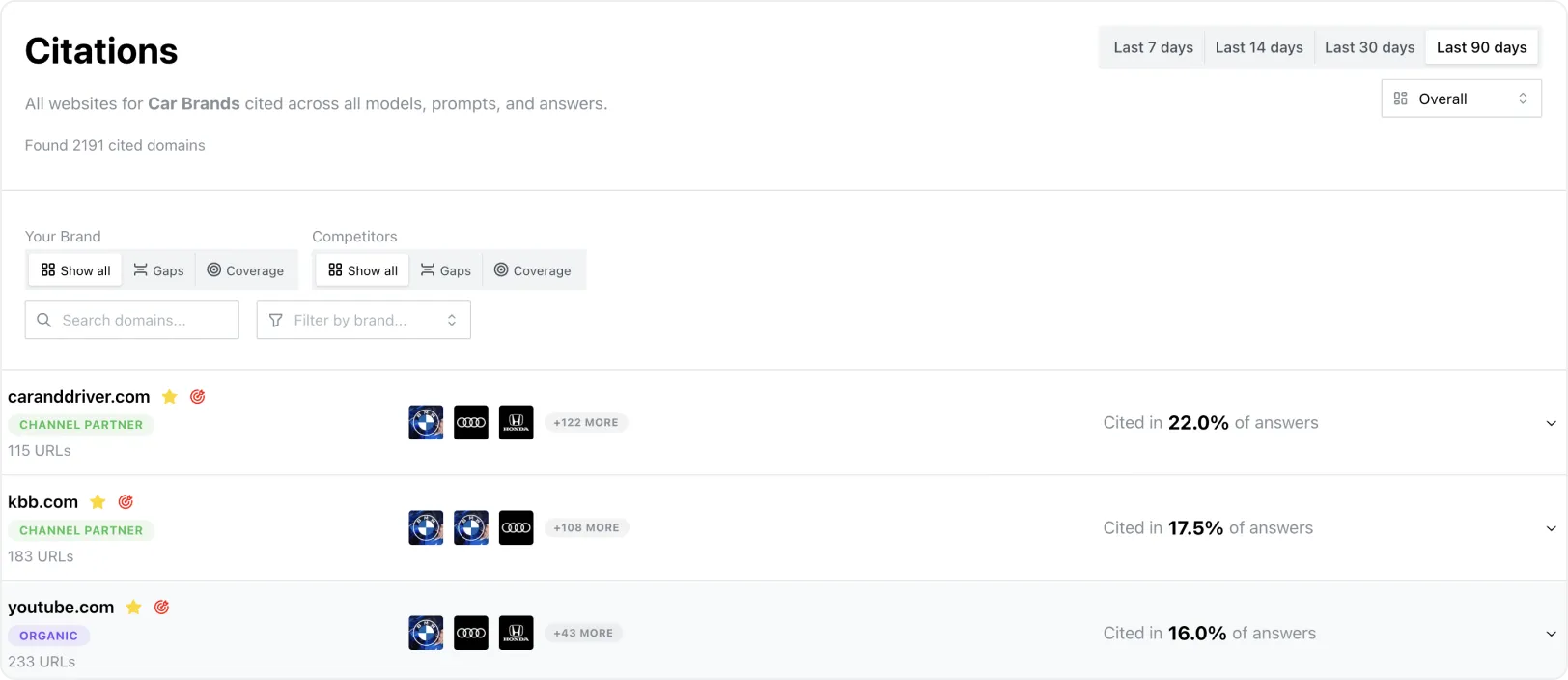

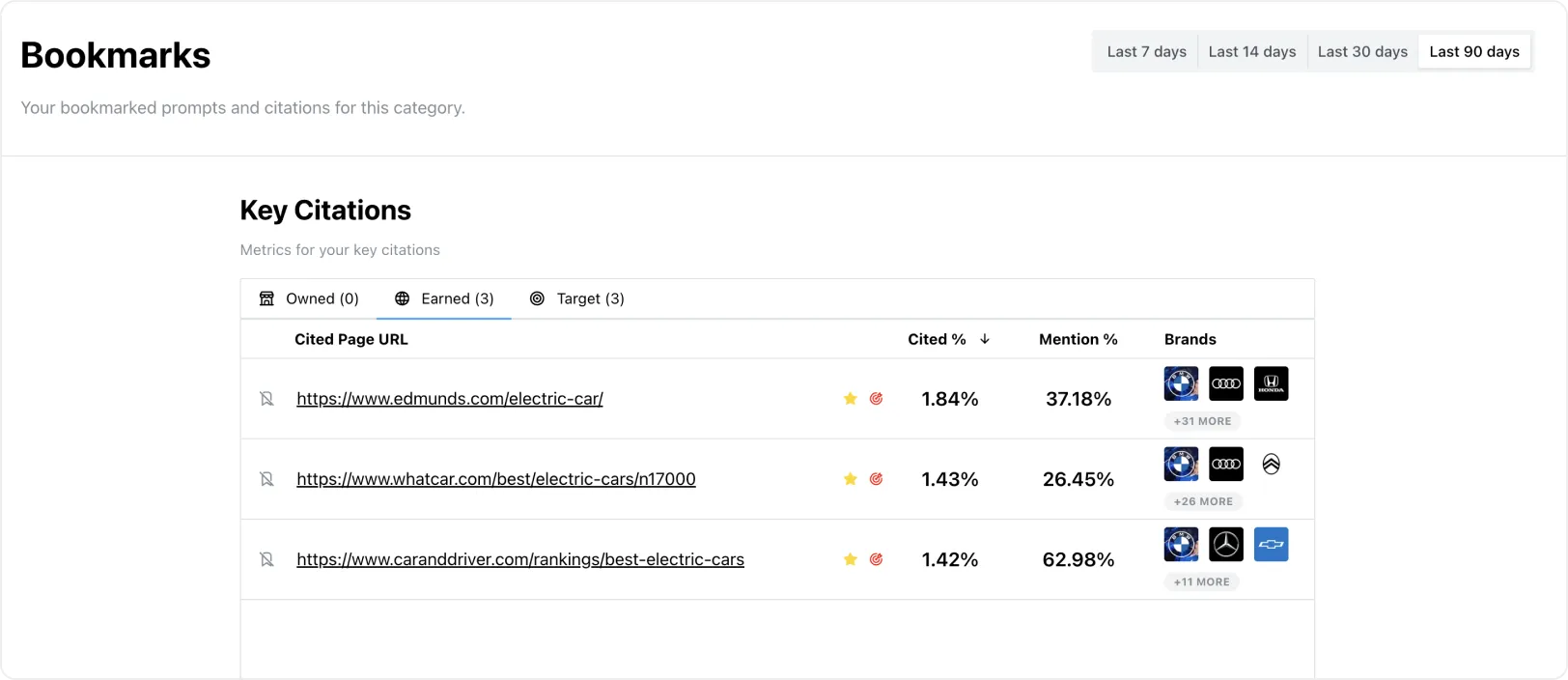

For instance, when we track "Best marketing automation platforms," you'll see which sources each LLM consistently cites, how often your brand appears compared to competitors, and which opportunities you're missing.

What Our Intelligence Reveals:

- Real mention frequency - Which sources get chosen most often by each LLM platform

- Competitive gaps - Specific prompts where competitors get cited but you don't

- Platform preferences - How ChatGPT, Gemini, and Perplexity differ in the sources they choose

- High-value sources - Publications and websites that consistently get referenced

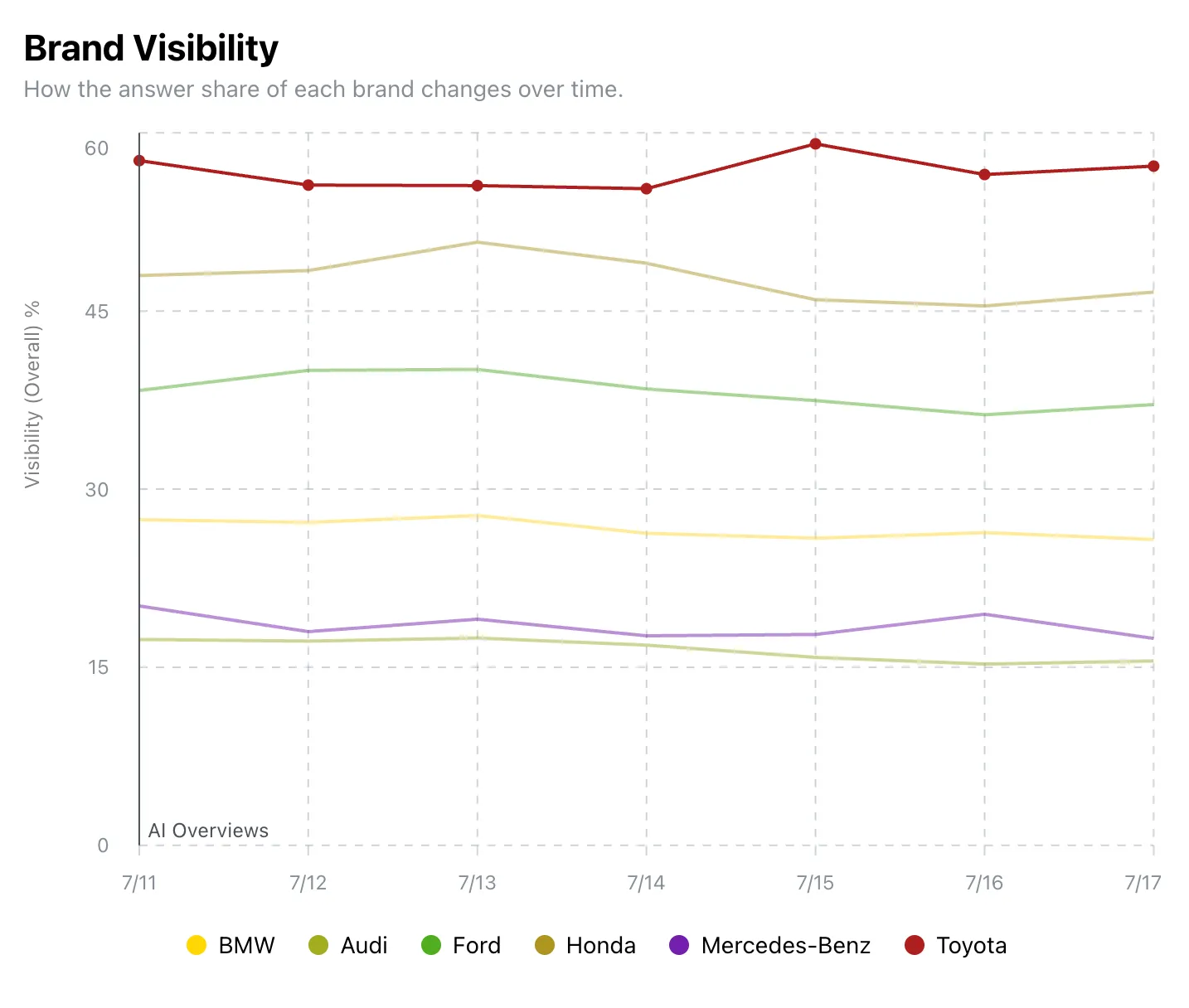

- Trending patterns - Which sources are gaining or losing mention frequency over time

- Brand opportunities - Where you could improve your visibility rates

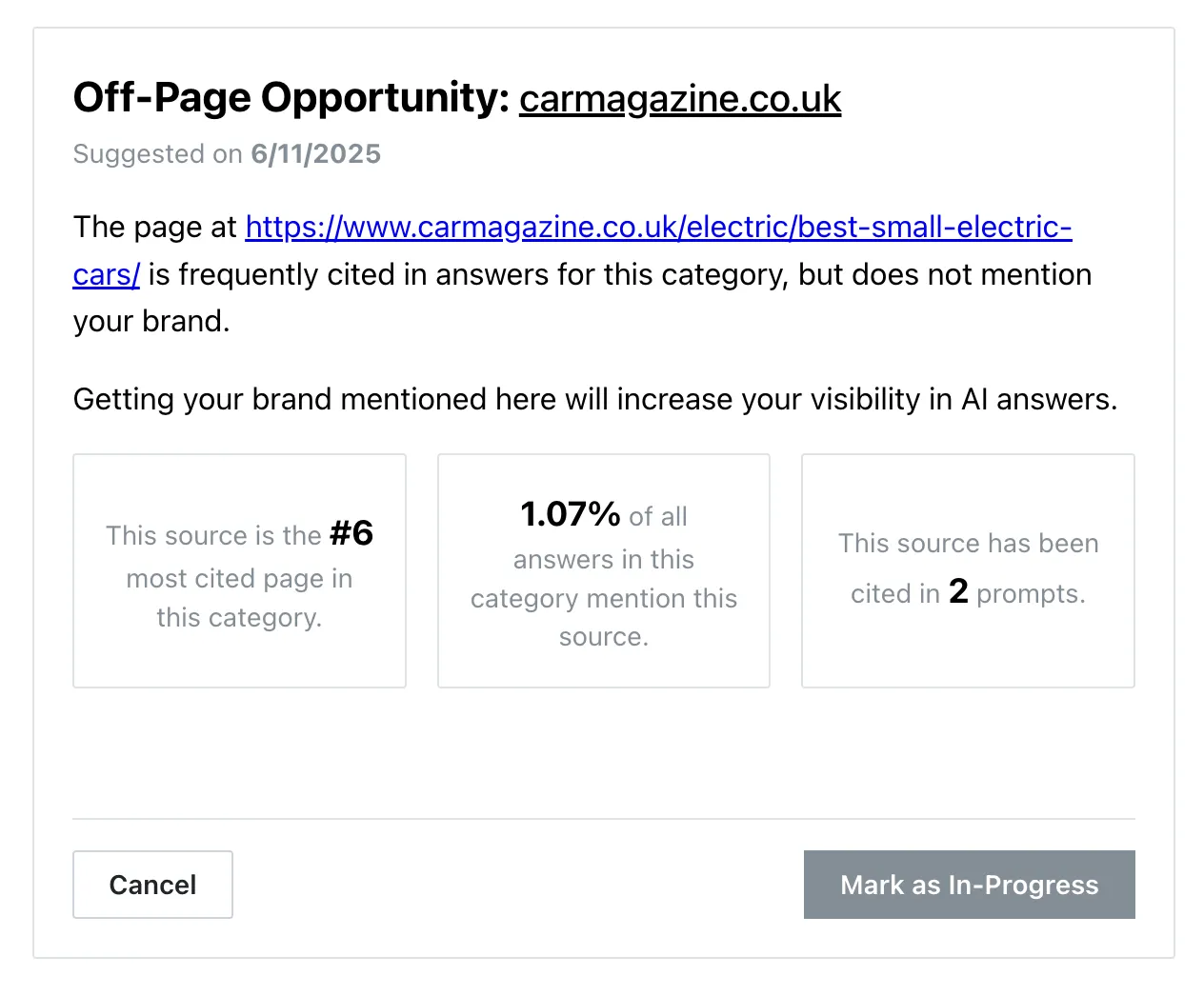

Get Actionable Insights Through Our Action Center

Our Action Center analyzes mention data to show you specific opportunities to get mentioned more often. Instead of generic advice, you'll see exactly which gaps to target and which high-frequency sources to pursue.

You'll see content gaps where competitors consistently get mentioned, high-value sources that get referenced frequently in your industry, and specific opportunities to increase your visibility based on actual LLM behavior.
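To make "mention frequency" and "content gaps" concrete, here is a minimal sketch of how such numbers could be computed from tracked responses. It is not how the Action Center works internally. The sample records, the brand names, and the `mention_rate` and `competitive_gaps` helpers are all hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Hypothetical sample data: each record is one LLM response to a tracked prompt,
# with the set of brands that response mentioned.
responses = [
    {"platform": "ChatGPT", "prompt": "Best marketing automation platforms",
     "brands": {"YourBrand", "CompetitorA"}},
    {"platform": "Gemini", "prompt": "Best marketing automation platforms",
     "brands": {"CompetitorA"}},
    {"platform": "Perplexity", "prompt": "Best marketing automation platforms",
     "brands": {"CompetitorA", "CompetitorB"}},
    {"platform": "ChatGPT", "prompt": "Top email marketing tools",
     "brands": {"YourBrand"}},
]


def mention_rate(responses, brand):
    """Return, per platform, the share of responses that mention `brand`."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for r in responses:
        totals[r["platform"]] += 1
        if brand in r["brands"]:
            hits[r["platform"]] += 1
    return {platform: hits[platform] / totals[platform] for platform in totals}


def competitive_gaps(responses, brand):
    """Return sorted (platform, prompt) pairs where other brands appear but `brand` does not."""
    return sorted(
        (r["platform"], r["prompt"])
        for r in responses
        if r["brands"] and brand not in r["brands"]
    )


rates = mention_rate(responses, "YourBrand")
gaps = competitive_gaps(responses, "YourBrand")
print(rates)
print(gaps)
```

In this toy data set, the gaps are the Gemini and Perplexity responses to "Best marketing automation platforms," where competitors are mentioned and the tracked brand is not.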

Track Your Performance Across All Major Platforms

Monitor how changes affect your mention frequency across LLM platforms. When you create new content or get featured on high-visibility sources, you'll see exactly how it impacts your appearance rates on ChatGPT, Gemini, Perplexity, and Google AI Overviews.

From Invisible to Dominant in Days

One customer used our data to understand which sources get referenced most in their industry and focused on getting featured in those publications. They went from appearing in 22% of relevant responses to 42% in just four days.

Another team used our intelligence to identify the content gaps where competitors dominated mentions, then tripled their visibility by targeting those specific opportunities.

Teams with source intelligence see faster results because they focus on what actually drives mentions instead of guessing about LLM preferences.

You'll stop guessing about LLM citations and start making decisions based on actual citation patterns that drive discovery.
